NY MRT DSRIP Evaluation Design

Centers for Medicare & Medicaid Services

7500 Security Boulevard, Mail Stop S2–01–16

Baltimore, Maryland 21244–1850

State Demonstrations Group

March 13, 2018

Mr. Jason Helgerson

Director

Office of Health Insurance Programs

New York State Department of Health

Empire State Plaza

Corning Tower (OCP–1211)

Albany, NY 12237

Dear Mr. Helgerson:

The Centers for Medicare & Medicaid Services (CMS) has completed its review of the Delivery System Reform Incentive Payment (DSRIP) Program evaluation design for New York's section 1115(a) demonstration (Project No. 11–W–00304/0), entitled "Medicaid Redesign Team" (MRT). We have determined that the submission dated March 9, 2018 meets the requirements set forth in the Special Terms and Conditions and hereby approve the MRT's DSRIP evaluation design.

If you have any questions, please do not hesitate to contact your project officer, Mr. Adam Goldman. Mr. Goldman can be reached at (410) 786–2242, or at Adam.Goldman@cms.hhs.gov. We look forward to continuing to partner with you and your staff on the MRT demonstration.

Sincerely,

Angela D. Garner

Director, Division of System Reform Demonstrations

Enclosure

cc:

Michael Melendez, Associate Regional Administrator, CMS New York Region

Independent Evaluation of the New York State Delivery System Reform Incentive Payment Program

Updated: March 9, 2018

Section A:

Background/Introduction

A.1 Delivery System Reform Incentive Payment Program:

The New York State Delivery System Reform Incentive Payment (DSRIP) Program is the main mechanism by which New York is implementing the Medicaid Redesign Team (MRT) Waiver Amendment. DSRIP's purpose is to fundamentally restructure the health care delivery system by investing in the Medicaid program, with the primary goal of reducing avoidable hospital use by 25% over five years. Up to $6.42 billion is allocated to this program with payouts based upon achieving predefined results in system transformation, clinical management and population health. DSRIP provides incentives for Medicaid providers to create and sustain an integrated, high performing health care delivery system that can effectively and efficiently meet the needs of Medicaid beneficiaries and low income uninsured individuals in their local communities by achieving the MRT Triple Aim of improving care, improving health, and reducing costs.

Through DSRIP, the New York State Department of Health (NYSDOH) seeks to transform the health care safety net, reduce avoidable hospital use and make improvements in other health and public health measures at the system and state level, and ensure sustainability of delivery system transformation through leveraging managed care payment reform. DSRIP provides incentive payments to reward safety net providers that undertake projects designed to transform systems of care supporting Medicaid beneficiaries and low income, uninsured persons by addressing three key elements: safety net system transformation; appropriate infrastructure; and assuming responsibility for a defined population. Safety net providers who collectively participate in DSRIP are referred to as the 25 regional Performing Provider Systems (PPS).

A.2 DSRIP Evaluation:

An Independent Evaluator (IE), the Research Foundation of the State University of New York (SUNY), is implementing a multi–method statewide evaluation of the DSRIP Program. The evaluation employs both quantitative and qualitative methods and will achieve the following goals:

- Assess program effectiveness on a statewide level with respect to the MRT Triple Aim;

- Obtain information on the effectiveness of specific projects and strategies selected and the factors associated with program success; and

- Obtain feedback from stakeholders including NYSDOH staff, PPS administrators and providers, and Medicaid beneficiaries served under DSRIP regarding the planning and implementation of the DSRIP Program, and on the health care service experience under DSRIP reforms. Evaluation results will be regularly reported to NYSDOH, the PPS and the Centers for Medicare and Medicaid Services (CMS).

A.3 DSRIP Special Terms and Conditions:

The evaluation will be consistent with the specifications outlined in the DSRIP Special Terms and Conditions (STC), Sections VIII.21 through VIII.33, as outlined in Attachment 1.

A.4 IE Performance Standards/Expectations:

The IE will address the following overarching Research Questions (RQs):

- To what extent did PPS achieve health care system transformation?

- Did health care quality improve as a result of clinical improvements in the treatment of selected diseases and conditions?

- Did population health improve as a result of implementation of the DSRIP initiative?

- Did utilization of behavioral health care services increase as a result of DSRIP?

- Was avoidable hospital use reduced as a result of DSRIP?

- Did DSRIP reduce health care costs?

- What were the successes and challenges with respect to PPS planning, implementation, operation and plans for program sustainability from the perspectives of DSRIP planners, administrators and providers, and why were they successful and challenging?

A.5 Measures and Available Data:

A set of measures described in the "DSRIP Strategies Menu and Metrics" will be used to quantify facets of system transformation (Domain 2), quality of care through clinical improvements (Domain 3), and population health (Domain 4) using existing data sources, described below. Though the IE is not limited to the use of these measures in their evaluation, they may be used for purposes of the DSRIP evaluation in assessing statewide outcomes. The majority of these measures are well established with known measurement stewards (e.g., 3M, AHRQ) and are commonly used in health care quality improvement activities.

Regardless of outcome measures, the IE has access to a number of existing data sources that are maintained by, or are available to, NYSDOH. Given public health law and/or data use agreements that govern access to these data, the IE is aware that obtaining access may require substantial time and effort, which is a consideration of their evaluation timeline.

- Medicaid Claims – This database contains billing records for health care services, including pharmacy, for approximately 5.7 million individuals enrolled in Medicaid in a given year. Also included are data on Medicaid enrollment status, diagnoses and provider associated with the billed services. The Medicaid claims database is updated on a monthly basis to include additional claims and modifications to existing claims. Given the claims processing, there is a six–month lag in the availability of complete and finalized Medicaid claims data, where data for a given year are considered final by June 30 of the following year.

- Medicare Claims – For approximately 15% of Medicaid enrollees who are dually eligible for Medicare, Medicare claims will be used to ensure data completeness, as many of the services received by this group will be paid by Medicare and thus not appear in the Medicaid database. Medicare claims contains billing records for health care services, including pharmacy services, along with data on diagnoses and provider information. NYSDOH is working with an external entity specializing in the linking of Medicaid and Medicare claims data which will ensure timely access to Medicare claims through monthly data updates.

- Statewide Planning and Research Cooperative System (SPARCS) – SPARCS is an all payer data reporting system established in 1979 as a result of cooperation between the health care industry and government. Initially created to collect information on discharges from hospitals, SPARCS currently collects patient level detail on patient characteristics, diagnoses and treatments, services, and charges for inpatient and outpatient (ambulatory surgery, emergency department, and outpatient services) hospital services, and for outpatient services from free–standing ambulatory surgery centers. SPARCS data may be used for medical or scientific research or statistical or epidemiological purposes. All entities seeking SPARCS identifiable or limited data must submit a request to SPARCS Operations using standard data request forms. Finalized SPARCS data for a given year are available in August of the following year.

- Minimum Data Set (MDS) – MDS 2.0 and 3.0 data consist of federally mandated assessments collected at regular intervals on all nursing home residents in New York. Assessment data collected include diseases and conditions, nutritional status, resident physical and cognitive functioning (e.g., activities of daily living), medications received, and nursing home admission source and discharge disposition. These data have been shown to be adequately reliable and are widely used in research, and are available to NYSDOH under data use agreement with CMS. There is, approximately, a six–month lag in the availability of complete MDS data, where finalized data for a given year are available in June of the following year.

- Consumer Assessment of Healthcare Providers and Systems (CAHPS®) – The Clinician & Group version of the CAHPS® survey will be administered by NYSDOH annually during the DSRIP demonstration period and will serve as the data source for selected outcome measures. The survey is administered by both mail and telephone, and assesses patients' experiences with health care providers and office staff. This includes information on patient experience over the last 12 months including most recent visit to provider, ease of getting an appointment, and wait times while in the office. The survey includes standardized questionnaires for adults and children. The adult questionnaire can be used in both primary care and specialty care settings; the child questionnaire is designed for primary care settings, but could be adapted for specialty care. Users can also add supplemental items to customize their questionnaires. Surveys are administered in September of a given year, and are available for use in February of the following year. Given confidentiality agreements, only de–identified CAHPS data will be available for use.

- New York Vital Statistics – Birth and death certificate data are maintained by New York, with New York City Department of Health and Mental Hygiene and NYSDOH comprising two separate jurisdictions in the reporting of birth and death records, which will likely necessitate separate data use agreements. NYSDOH has the responsibility for annual statewide reporting of vital statistics governed by the terms of a memorandum of understanding between the two jurisdictions. Birth records contain information such as maternal medical risk factors, prenatal care received, infant birth date, birth weight, and infant diseases/conditions including congenital malformations. Death certificate data include date of death, underlying and multiple cause of death, decedent demographics, county of residence, and county of death. While Vital Statistics data are received by NYSDOH on an ongoing basis, due to the process of updating and finalizing information from birth and death certificates (e.g., due to delayed receipt of lab results), data for a given year are not considered complete until the end of the following year.

- Expanded Behavioral Risk Factor Surveillance System (eBRFSS) – eBRFSS augments the Centers for Disease Control and Prevention (CDC) BRFSS, which is conducted annually in New York. eBRFSS is a random–digit–dialed telephone survey among adults 18 years of age and older representative of the non–institutionalized civilian population with landline telephones or cell phones living in New York. The goal of eBRFSS is to collect county–specific data on preventive health practices, risk behaviors, injuries and preventable chronic and infectious diseases. Topics assessed by the eBRFSS include tobacco use, physical inactivity, diet, use of cancer screening services, and other factors linked to the leading causes of morbidity and mortality. The 2013–14 eBRFSS survey will be used as the baseline for DSRIP for measures derived from these data, and contains a question to identify Medicaid respondents. Repeat eBRFSS surveys to be used in support of the DSRIP evaluation will be conducted in 2016–17, and again in 2019–20.

- New York HIV/AIDS Case Surveillance Registry – This registry contains information on new cases of HIV and AIDS, as well as persons living with HIV or AIDS. Data include date of diagnosis, HIV exposure category, county of residence at diagnosis, and whether or not diagnosis was made while individual was incarcerated.

- Uniform Assessment System (UAS) – UAS contains assessment data on individuals receiving home or community–based long term care (e.g., adult day health care, long term home health care). Data include patient functional status, health status, cognitive functioning, and care preferences.

- US Census – These data are publicly available from the United States (US) Census Bureau, and contain estimates of population size, and data on population characteristics. The latter include housing status, income, employment status, educational level, and health insurance coverage. US census data are gathered on an ongoing basis from a number of surveys including the Decennial Census, the American Community Survey, and the Economic Census.

- Medical Record – Measures that are derived from medical records will be reported by PPS, or their participating providers.

- Medicaid and Medicare Claims – These data, as well as SPARCS data, are available from the Office of Health Insurance Programs (OHIP) Data Mart. Implemented in 1998, the OHIP Data Mart serves as a data repository to support analytical reporting and applications for NYSDOH, the Office of the Medical Inspector General, and the Office of the Attorney General. It supports analytics and ad hoc user queries, and supports a number of projects including Medicaid Claims History, the Medicaid Drug Rebate Application, and MRT Performance Analytics.

The IE will use a mixed methods strategy to meet the project objectives. This strategy offsets the weaknesses inherent in single method approaches and allows them to confirm, cross–validate, and corroborate the findings (Creswell, et al., 2003; Teddlie and Yu, 2007). See Sections B, C and D for more detailed explanations and strategy rationales.

A.6 Study Populations and Sample Sizes:

In November 2017, NYSDOH responded to CMS's request to present a sampling strategy that explains how the 25 distinct PPS offer a distinct form of the intervention and the intensity of the intervention is expected to vary greatly across the state. CMS suggested the plan provide details on how the treatment and comparison groups will be identified in each data source and what identifiers will be used to match records across sources (e.g., SSN, Medicaid ID, name and address, provider numbers). CMS further stated that the plan should also document the ability to identify attributed or served patients and include a discussion of challenges in obtaining and integrating data and strategies for overcoming them. NYSDOH responded that the IE will receive information needed to identify members attributed to each PPS, and members not attributed to any PPS. The IE has further specified that they will create exact matches using available data on social security number, date of birth, and name across non–Medicaid datasets identified in this evaluation plan and will incorporate the whole population in their analysis.

Other methods for matching will be utilized (e.g., propensity score matching) as needed based on availability and reliability of the measures. Their analysis will be carried out at different levels of the population (aggregated view of impact at the state level, then at the PPS and intra–PPS level, and finally at a more individual level). Any issues with sample selection and missing data will be resolved using statistical methods (e.g., Heckman correction, MICE imputations). After data cleaning, the research hypothesis may be tested by changing the control and treatment groups; the IE has further specified that they will explore other options for exact matching or propensity score matching, and performing sensitivity analysis.
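The exact–match linkage on social security number, date of birth, and name described above can be sketched as follows. This is a minimal illustration only: the field names (`ssn`, `dob`, `name`) and the normalization rules are assumptions made for the example, and the design does not specify the IE's actual linkage procedure.

```python
def match_key(record):
    """Build a normalized (SSN, date of birth, name) key for exact matching.

    Field names are hypothetical; real datasets will use their own layouts.
    """
    ssn = "".join(ch for ch in record["ssn"] if ch.isdigit())
    name = " ".join(record["name"].upper().split())
    return (ssn, record["dob"], name)


def exact_match(medicaid_records, other_records):
    """Return (medicaid_record, other_record) pairs whose keys agree exactly."""
    index = {}
    for rec in other_records:
        index.setdefault(match_key(rec), []).append(rec)
    pairs = []
    for rec in medicaid_records:
        for hit in index.get(match_key(rec), []):
            pairs.append((rec, hit))
    return pairs
```

Records that fail the exact match are the ones for which the other matching methods mentioned above (e.g., propensity score matching) would need to be considered.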

NYSDOH also responded to CMS's suggestion that the evaluation include a plan for assessing the use of DSRIP funds for non–Medicaid populations (e.g., the uninsured to be included in the PPS implementing the "11th project"). CMS stated that if these funds represent a significant share of DSRIP funding, the design should include a sampling strategy documenting how this population is served and samples sufficient to estimate the impacts/benefits. Initially, NYSDOH stated that assessing the use of DSRIP funds for non–Medicaid populations such as the uninsured is outside of the scope of the evaluation (IE contract) and that a (contract) amendment would not be feasible with the timely submission of the Draft Interim Evaluation Report and Preliminary Summative Evaluation Report.

This has been revisited by the IE. SPARCS data would be able to be utilized to determine, in a limited capacity, utilization patterns of the uninsured in the inpatient and emergency department settings based upon the patient discharge dataset since those beneficiaries will not appear in the Medicaid claims. To examine whether the uninsured for the 14 PPS that are participating in the 11th Project are representative of the larger DSRIP population, the IE will examine hospital discharge records of the uninsured and compare the 14 PPS to the remainder.

Section B:

Time Series Design:

As stated in Attachment 1 regarding the STC, quantitative analysis to assess the effect of DSRIP on a statewide level will use a time series approach to the comparison of health outcomes following the implementation of DSRIP, to a time period prior to DSRIP's implementation.

B.1 Using this approach, the IE will test the following hypotheses:

- Health care service delivery will show greater integration.

- Health care coordination will improve.

- Primary care utilization will show a greater upward trend.

- Expenditures for primary care services will increase.

- Utilization of, and expenditures for, behavioral health care service will increase.

- Expenditures for emergency department and inpatient services will decrease.

- Primary care, behavioral health, and dental service utilization will increase among the uninsured, non–utilizing, and low–utilizing populations, while emergency department use will decrease.

- Through clinical improvements implemented under DSRIP, health care quality in each of the following areas will increase:

- Behavioral health

- Cardiovascular health

- Diabetes care

- Asthma

- HIV/AIDS

- Perinatal care

- Palliative care

- Renal care

- Population health measures will show improvements in the following four areas:

- Mental health and substance abuse

- Prevention of chronic diseases

- Prevention of HIV and STDs

- Health of women, infants, and children

- Avoidable hospital use will be reduced.

- Costs associated with hospital inpatient and ED services will show reductions or slowed growth.

- Total cost of care will show reductions or slowed growth.

The IE will emphasize comparison of health care service delivery, health improvements, and cost to the Medicaid program at the state level over the study period. They will also do an inter–PPS analysis to identify components that posed success or challenges for implementation and outcomes by difference–in–differences (DID) analysis. Possible improvement in 12 broad categories of health care under four (4) domains is envisioned.

The IE will use the interrupted time series design with segmented regression on the following statewide time series to evaluate the statewide impact of DSRIP with quarterly observations ending in April 2019. Using the dataset starting from 2005 and defining 2014 as DSRIP Year 0, the IE will have 10 years of pre–DSRIP data to control for existing trends in performance measures due to concurrent health care reforms, both nationally and statewide. The IE will examine whether post–DSRIP values are better than those of the pre–DSRIP period from the standpoint of utilization, spending, and change in outcome measures when compared to the newly designed Medicaid program.

Although the IE will use the interrupted time series (ITS) design as the main component of their analysis, ITS assumes that, without the intervention, existing trends in the outcome would have continued unchanged. The ITS design does not require the use of a comparison group, but is limited in controlling for external shocks (e.g., Medicaid expansion, individual mandate, overall changes in medical practice). This motivates the IE to explore whether an appropriate non–DSRIP control group of patients (using propensity score or exact matching) or a comparison group of non–DSRIP providers (using cluster analysis to find similar hospital sites) can be identified for conducting DID analysis using time series and panel data. The IE realizes that a non–Medicaid population as a control group will be hard to identify because it would likely differ in many ways from the Medicaid population in socio–demographic characteristics and, more importantly, in health. The IE will experiment with creating these comparison groups from the non–Medicaid population by matching all payer SPARCS data with DSRIP network information for at least a subset of its research questions. In addition, the IE will use a full–scope, Medicaid–enrolled, non–DSRIP attributed population as a control if the data are available. For research questions pertaining to performance in specific projects, PPS not selecting the project can also be used as a control group.

The DID estimator only requires that in the absence of the treatment, the average outcomes of the treated and control groups would have followed parallel paths over time and that responses to "common shocks" (e.g., Medicaid expansion, individual mandate, overall changes in medical practice) are similar. Even this assumption may not be reasonable because the pre–treatment characteristics may be associated with the dynamics of the outcome variable in ways that affect the control and treatment groups asymmetrically. In this situation, the IE will experiment with Abadie's (2005) simple two–step semi–parametric strategy to estimate the average treatment effect on the treated. These methods will have to be corrected for serial correlation in the outcome variable by using appropriate cluster analysis.

The IE plans to experiment with the aforementioned ideas during the current year and use statistical tests to decide whether a comparison group can be identified for each of its research questions. For those questions where a suitable comparison group could not be identified, the IE will use the ITS to study the effect of DSRIP. These results will be reported in the "2019 Statewide Annual Report" and the "CMS 2019 Interim Evaluation Report."
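As an illustration of the interrupted time series design with segmented regression, the sketch below fits the common four–parameter model y_t = b0 + b1*t + b2*post_t + b3*(t - start)*post_t by ordinary least squares, where b2 is the level change and b3 the trend change at the start of the intervention. The model form, function names, and the use of plain least squares are assumptions made for this example; in particular, the sketch does not correct for serial correlation, which the design notes must be handled.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def segmented_regression(y, start):
    """Fit the segmented model by OLS and return [b0, b1, b2, b3].

    y     : list of quarterly outcome values, in time order
    start : index of the first post-intervention quarter (hypothetical input)
    """
    rows = []
    for t in range(len(y)):
        post = 1.0 if t >= start else 0.0
        rows.append([1.0, float(t), post, (t - start) * post])
    k = 4
    # Normal equations: (X'X) b = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * v for r, v in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)
```

With ten years of quarterly pre–DSRIP data, `start` would be 40 under the assumption that quarters are indexed from zero; a negative b2 or b3 for an outcome such as avoidable hospital use would indicate a drop in level or a slowing of the trend after implementation.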

B.2 Research Questions

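| Research Question | Hypotheses |
|---|---|
| 1. To what extent did PPS achieve health care system transformation? | |
| 2. Did health care quality improve as a result of clinical improvements in the treatment of selected diseases and conditions? | Through clinical improvements implemented under DSRIP, health care quality in each of the following areas will increase: behavioral health; cardiovascular health; diabetes care; asthma; HIV/AIDS; perinatal care; palliative care; renal care. |
| 3. Did population health improve as a result of implementation of the DSRIP initiative? | |
| 4. Did utilization of behavioral health care services increase as a result of DSRIP? | Utilization of, and expenditures for, behavioral health care service will increase. |
| 5. Was avoidable hospital use reduced as a result of DSRIP? | |
| 6. Did DSRIP reduce health care costs? | Health care expenditures associated with services under DSRIP will show a reduction or slowed growth. |
| 7. What were the successes and challenges with respect to PPS planning, implementation, operation and plans for program sustainability from the perspectives of DSRIP planners, administrators and providers, and why were they successful and challenging? | This RQ is not applicable to the Time Series Analysis. See Section C. |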

The IE will consider two possible "comparisons." The first is a patient–level control group made up of Medicaid beneficiaries who were not exposed to any PPS intervention for a certain amount of time, so that they are direct controls for the intervention group of patients. The IE can match using propensity scores from Medicaid enrollment and claims, plus geography if possible. The second is a hospital–level comparison group made up of hospitals that did not participate in DSRIP but have similar characteristics. Such hospitals may be difficult or even impossible to identify, because most safety net hospitals in New York are in a PPS. For a hospital–level comparison, the IE would compare average hospital–level outcomes (rates of hospitalization, Medicaid spending, emergency room visits, etc.) between the comparison hospitals and the PPS hospitals. In both cases, the IE could use DID, Time Series, or ITS. There are limitations for each. In DID, the IE is relying on two assumptions: 1) parallel trends, and 2) common shocks external to the intervention. In ITS, the IE is assuming that pre–intervention trends would have continued. The IE does not know which assumption holds at this point because the IE does not yet have the data, so they will assess the utility of both methodologies. The IE can do DID with propensity score matching on patient level data, because there are enough non–PPS patients to find matches. DID with a hospital comparison is tougher, because there will not be a good one–to–one match with PPS hospitals. Finally, DID analysis with another state is not feasible: it would not be within the scope of the IE contract for the IE to perform DID with comparisons from a non–DSRIP state.
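The basic two–group, two–period DID estimate referred to above can be written as the change in the treated group's mean outcome minus the change in the control group's mean outcome. The following is a minimal sketch under that textbook definition; the function and argument names are illustrative, and it omits the matching, covariate adjustment, and serial correlation corrections discussed in this section.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def did_estimate(treated_pre, treated_post, control_pre, control_post):
    """2x2 difference-in-differences on group means.

    Each argument is a list of outcome values (e.g., hospitalization rates)
    for one group in one period. Under parallel trends and common shocks,
    the control group's change stands in for what the treated group's
    change would have been without the intervention.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change
```

A negative estimate for an outcome such as emergency room visits would mean the treated group declined by more than the control group over the same period.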

As described in Section B.1, during the current year, the IE will explore creating a control group of non–DSRIP patients and a comparison group of non–DSRIP hospitals and assess whether it is feasible to use them given that DSRIP is so far reaching. Most Medicaid beneficiaries are receiving care and being exposed to PPS even if not technically attributed under the 50% threshold.

The Time Series Analysis will use a "global" comparison group to develop a state–wide control group of hospitals.

Additional information regarding the above RQs:

Sub research questions were added or expanded for those noted below but others are not possible within the current scope of the contract.

RQ3. Also, racial and ethnic disparities will be addressed with respect to the following metrics: premature deaths, newly diagnosed cases of HIV, preterm births, adolescent pregnancy rate per 1,000 females aged 15–17, percentage of unintended pregnancy among live births, and infants exclusively breastfed while in the hospital. Disparities on these outcomes will be measured as ratios and will be treated as additional outcomes at the statewide level with the prediction that these ratios will show improvement (i.e., will be reduced) following DSRIP implementation.

RQ6. It is hypothesized that following the introduction of DSRIP, the health care of Medicaid patients has become better and the program has also become economically more efficient. Due to small sample size and multiple hypothesis testing, correct significance levels have to be determined by controlling the false discovery rate (FDR), rather than by conventional Bonferroni bounds.

Supplemental RQ: Was DSRIP cost effective in terms of New York State and federal governments receiving adequate value for their investments?

A set of measures described in the "DSRIP Strategies Menu and Metrics" will be used to quantify the performance measures. Because a large number of hypotheses will be tested, the problem of the inflated type I error will be mitigated by replacing the conventional Bonferroni methods with the control of the false discovery rate (FDR), defined as the expected proportion of errors (i.e., null hypotheses that are actually true) among a set of null hypotheses that have been rejected. In addition, a comparative analysis will be conducted for efficiency and effectiveness based on the chosen projects on alternative domains using a DID methodology.
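One widely used way to control the FDR is the Benjamini–Hochberg step–up procedure, sketched below. The design does not name the specific FDR procedure the IE will use, so the choice of Benjamini–Hochberg and the target level `q` are assumptions made for illustration.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns a list of booleans aligned with p_values: True where the
    null hypothesis is rejected at FDR level q.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k such that p_(k) <= k * q / m.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            max_rank = rank
    # Reject every hypothesis ranked at or below that k.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected
```

Compared with a Bonferroni bound, which tests every p–value against q/m, the step–up thresholds grow with rank, so the procedure generally rejects at least as many hypotheses while keeping the expected share of false rejections at or below q (under independence or positive dependence of the tests).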

B.3 Determination of Cost Effectiveness:

Cost–effectiveness analysis, in the simplest terms, calculates the ratio of the amount of "effect" a program achieves for a given amount of cost or investment in the program incurred, or conversely, the amount of cost required to achieve a given impact. For program evaluation such as the DSRIP evaluation, this means measuring the impact of a program on achieving a given policy goal (e.g., the additional reduction in avoidable hospital use as a result of DSRIP against the cost of the program). This ratio, when calculated for a range of alternative programs addressing the same policy goal, conveys relative impacts and costs of these programs in an easily understandable and intuitive way.

The value of cost–effectiveness analysis is two–fold: first, its ability to summarize a complex program in terms of an illustrative ratio of effects to costs, and second, the ability to use this common measure to compare multiple programs evaluated in different contexts and in different years. The first requires technical correctness with respect to the program's actual costs of administration and its impacts as they were evaluated, while the second requires adherence to a common methodology for estimating costs and effectiveness across various programs. For cost–effectiveness analysis to be a useful tool, it is necessary to agree on an outcome measure that would be the key objective of many different programs and policymakers. In this evaluation, there are two obvious contenders: the reduction in avoidable hospital readmissions, a goal of the DSRIP intervention, and the improvement in health outcomes for the population, a public health goal. Since this is a summative evaluation method, the entire pre–post DSRIP time horizon will be taken for the analysis. The DSRIP policy is compared to a baseline policy of do–nothing, or status quo (traditional Medicaid for New York State). The incremental costs of each life–year gained or of each hospital readmission avoided under the traditional and DSRIP Medicaid programs will be calculated, and the incremental cost per life–year gained for each scenario will then be derived. Sensitivity analysis will be conducted to assess the robustness of the results to other policy changes in the system or a change in case–mix of the beneficiaries. The uncertainty surrounding the effectiveness of the program in reducing hospital admissions and readmissions and in improving life–years gained, and the impact of that uncertainty on total costs per life–year gained or per reduction in hospital readmissions, will be calculated using the minimum and maximum effectiveness values from the literature review currently in process by the IE concerning these outcomes.
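The incremental comparison of DSRIP against the status quo described above corresponds to the standard incremental cost–effectiveness ratio: the difference in cost divided by the difference in effect. The sketch below assumes that definition; the function name, argument names, and the figures in the usage note are hypothetical and are not drawn from the evaluation.

```python
def icer(cost_new, cost_baseline, effect_new, effect_baseline):
    """Incremental cost-effectiveness ratio of a new policy versus a baseline.

    Costs are in dollars; effects are in a common unit such as life-years
    gained or avoidable readmissions prevented.
    """
    delta_effect = effect_new - effect_baseline
    if delta_effect == 0:
        raise ValueError("no incremental effect; ratio is undefined")
    return (cost_new - cost_baseline) / delta_effect
```

For example, with made–up inputs, `icer(1_200_000, 1_000_000, 150, 100)` gives the extra dollars spent per additional unit of effect. Re–running the function with the minimum and maximum effectiveness values mentioned above is one simple way to bracket the result in a sensitivity analysis.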

The Time–Series component of the evaluation will focus on the macro–level cost–effectiveness analysis with the counterfactual being addressed in the pre–post DSRIP comparison. The comparative component will focus on the variations among PPS in the achievement of the effects noted above among the various programs and projects initiated across the State under the DSRIP program. Working closely with the NYSDOH in order to determine yearly costs of administering the Medicaid program in New York State prior to, and after the DSRIP incentive program, these costs will be compared to the yearly measures pre– and post–DSRIP in avoidable hospital readmissions and health outcomes such as life years gained. Cost effectiveness thresholds will be determined with the NYSDOH prior to the cost effectiveness evaluation and sensitivity analysis will be performed given that there are many health policy changes that affect the Medicaid population during this period of the DSRIP intervention as well as some provider changes within DSRIP. The complexity of this analysis will depend on the type and richness of the data acquired from the Assessor and NYSDOH. This macro–level analysis builds on findings at the state level of RQs 3 through 5. Since this is a pre–post comparison of costs and effects at the macro or PPS–level of analysis, the measures will be discounted for time value and adjusted for uncertainty and risk–attitude as noted above. Further, marginal cost–effectiveness will be calculated since the programs reflect an on–going decision–making process.
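Discounting yearly costs or effects for time value, as mentioned above, is conventionally done by converting each year's amount to a present value. The sketch below assumes a constant annual discount rate and treats the first year as the undiscounted base year; the design does not state the rate or convention the IE will adopt.

```python
def present_value(yearly_amounts, rate):
    """Present value of a stream of yearly amounts.

    yearly_amounts : amounts in time order; index 0 is the base year
    rate           : annual discount rate as a fraction (an assumed input)
    """
    return sum(a / (1.0 + rate) ** t for t, a in enumerate(yearly_amounts))
```

Applying the same function to both the cost stream and the effect stream before forming a cost–effectiveness ratio keeps the numerator and denominator on a comparable footing.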

Challenges for the Cost–effectiveness Analysis

There are obstacles to obtaining the cost–effectiveness determinations, which include difficulties in obtaining costs of the DSRIP intervention by PPS or over time as the PPS learn about the best methods to deliver their project workflows to the targeted population. In order to mitigate this issue, sensitivity analysis will be performed in order to determine the robustness of the outcomes over time and for various policies that simultaneously affect the Medicaid population over the period considered.

Section C:

Qualitative Analysis:

Qualitative information obtained from DSRIP planners, administrators, providers, and beneficiaries is expected to play a vital role in the DSRIP evaluation. The IE´s qualitative methods will:

- Identify facilitators and barriers to PPS achieving progress on pay–for–reporting/pay–for–performance metrics using feedback from PPS administrators, providers, and patients, and identify the issues that are characteristic of particular strategies or projects.

- Conduct PPS case study evaluation by obtaining information from DSRIP stakeholders on an ongoing basis on program planning, implementation, operation, and effectiveness to guide quality improvement through project refinements and enhancements.

Qualitative methods to be used include key informant interviews, focus groups, and surveys, with issues to be investigated qualitatively to include notable program outcomes and challenges, effectiveness of governance structure and provider linkages, contractual and financial arrangements, challenges in the delivery of patient care, the effect of other ongoing health care initiatives (e.g., New York Prevention Agenda, Affordable Care Act) on DSRIP implementation and operation, and patient experience and satisfaction with services. In the qualitative component of the evaluation, the IE will develop qualitative instruments to address the central evaluation questions and to augment results of quantitative analysis. This will include the determination of interview or survey questions with appropriate review and pre–testing to ensure that questions are comprehensive, understandable, and reliable, a plan and schedule for data collection, and a plan for analysis.

The IE's qualitative data collection will be designed to address the RQs, aims, and broad objectives described in this section. Qualitative data will provide context for the quantitative questions assessing RQ 1–4, which focus on system transformation, clinical improvement, and population–wide projects (Domains 2–4). These questions focus on the implementation of projects initiated with the DSRIP program. Qualitative data will also address RQ 7, which asks about successes and challenges related to different aspects of the DSRIP program.

Gaining an understanding of these RQs, aims, and objectives will provide integral information on the implementation and operation of DSRIP, the successes and challenges of PPS and projects within DSRIP, and guidance on sustaining programming going forward.

The IE will use four major data sources to collect qualitative information from a number of relevant stakeholders in order to capture diverse perspectives and maximize the information collected. Interviews with PPS administrators, surveys with patients, and surveys with project–associated providers will be completed once over the course of data collection for each PPS. While it would be helpful to survey non–engaged providers for comparative purposes, this additional survey component is largely not feasible because the PPS will not have accurate contact information for partners with which they are no longer engaged. This component is also outside of the scope of the research questions. These data sources will be used to collect qualitative data on three major focal points: the DSRIP program overall, individual projects, and patient experience. In general, interviews and focus groups will be the major data source of patient satisfaction and experience, and surveys of providers will be the major source of project–specific data. These methods of data collection were selected to be able to efficiently and thoroughly address all of the areas of inquiry described in the table below.

| Areas of Inquiry | Interviews with PPS Administrators and Staff | Focus Groups with Providers on Projects | Surveys with Patients | Surveys with Providers on Projects |
|---|---|---|---|---|
| DSRIP Program Overall | | | | |
| Program planning, operation, and effectiveness | X | X | X | |
| Program outcomes and challenges | X | X | X | |
| Plans for program sustainability | X | X | | |
| Effectiveness of governance structure and provider linkages | X | X | | |
| Facilitators and barriers to PPS achieving progress on pay–for–reporting/pay–for–performance metrics | X | X | X | |
| Contractual and financial arrangements including provider transformation to Value Based Payments | X | X | X | |
| Challenges in the delivery of patient care | X | X | X | |
| The effect of other ongoing health care initiatives (e.g., New York Prevention Agenda, Affordable Care Act) on DSRIP implementation and operation | X | X | X | |
| Project Specific | | | | |
| Progress/effectiveness of projects focused on system transformation | X | X | X | |
| Progress/effectiveness of projects focused on behavioral health | X | X | X | |
| Progress/effectiveness of projects focused on clinical improvement and population | X | X | | |
| Identify the issues that are characteristic of particular strategies or projects (in terms of metrics) | X | X | | |
| Patient Experience | | | | |
| Patient satisfaction and experience | X | | | |

Prior to collecting data through surveys, focus groups, and interviews, there are a number of preparatory actions that will occur, including identifying participants, preparing protocols, and working with state and local Institutional Review Boards to ensure compliance with human subjects´ requirements.

The IE will work closely with the PPS staff and administrators to identify the appropriate stakeholders needed for interviews and focus groups. PPS will aid the IE by providing lists of names and contact information for appropriate PPS planners and administrators for interviews. In addition, lists of names and contact information (including email addresses) will be sought from PPS identifying relevant providers that are associated with and knowledgeable of each of their DSRIP projects. This information is necessary for the administration of surveys addressing specific projects. Because provider lists are so vast within the PPS, identifying the appropriate stakeholders is important as it will guide recruitment efforts for focus groups, with the goal of recruiting a diverse group of perspectives.

Another preparatory activity is developing question sets and protocols. Because the goal is to hear diverse perspectives on research objectives, numerous questions will be asked of multiple stakeholders to gain a holistic understanding of all areas of inquiry. Question sets will be developed for each method of data collection. Interview and focus group question sets will be semi–structured, such that all respondents (PPS administrators or providers) will be asked the same questions; however, some items may elicit probing for additional information. Survey items will be selected using existing measures whenever possible to ensure psychometrically rigorous measures are employed. Questions will be developed for any question areas without existing measures. All items will be carefully reviewed and pre–tested, which will ensure that all items are easily understandable and thorough. All data collection protocols will be approved by the Institutional Review Board (IRB) at SUNY at Albany for human subjects research. Changes to interview and focus group questions may be necessary based on responses during early data collection. Any changes will be carefully reviewed by the IE and approved by the IRB, as needed, before use. In addition, all IE staff involved in data collection will be trained on the handling and storage of confidential information.

Once approaches are developed and participants are identified, focus groups, interviews, and surveys will be scheduled and conducted.

| Population | Method | Cycle 1 April 2017 – Dec. 2017 | Cycle 2 April 2018 – Dec. 2018 | Cycle 3 April 2019 – Dec. 2020 |
|---|---|---|---|---|
| PPS Administrators | Telephone Interviews | 25 | 25 | |
| PPS Team Leaders | Telephone Interviews | 125 | | |
| DSRIP–Associated Providers | Focus Groups | 8 Groups | 8 Groups | 8 Groups |
| DSRIP–Associated Providers | Web Survey | 2400 w/response rate goal 50–60% | 2400 w/response rate goal 50–60% | 2400 w/response rate goal 50–60% |
| Patients | Phone/Mail Survey | CAHPS Survey Data from DY1–5 | | |

C.1. PPS Administrative Key Informant Interviews

Sample Selection

Key informant interviews will be conducted with administrators and staff annually in each of the 25 PPS located throughout the four regions of New York State. In the first year of data collection, interviews will be conducted with PPS administrators. Using purposive sampling (Bryman 2012; Creswell 2013; Patton, 2002), PPS administrators chosen for interviews will be individuals who are most knowledgeable about DSRIP start–up, implementation, ongoing processes, administrative components, and challenges. Specifically, the sample will include the chief executive officer, chief operating officer, or the individual currently responsible for all operations; someone with authority who was involved in PPS startup; the fiscal officer or individual involved in financial transactions; and others identified by either the NYSDOH or the PPS who are vital to the ongoing operations of the PPS. Each PPS had leadership join at different junctures, and many will have leaders with specialized knowledge in certain areas. In the second year of data collection (DSRIP DY4), the research team will schedule interviews with PPS leaders responsible for the implementation and operation of their selected projects. Each PPS has selected up to 11 DSRIP projects from the DSRIP Project Toolkit (e.g., the integration of primary care and behavioral health services, development of community–based health navigation services). These interviews will shed light on factors related to the successful implementation of various DSRIP projects. The sample will include all PPS staff members with professional experience launching or running PPS projects. In the third year of data collection, the research team will again schedule interviews with PPS senior leadership for follow up.

Data Collection Procedures

Telephone interviews will be scheduled at the convenience of the PPS staff and administration and will be conducted with PPS staff and administrators annually in these periods:

- Research Cycle 1 (July – December 2017): Senior Leadership

- Research Cycle 2 (July – December 2018): PPS Staff Responsible for Projects

- Research Cycle 3 (July – December 2019): Senior Leadership

The interviews will be guided by a semi–structured interview protocol and should take no more than two hours to complete. A core set of questions will be asked of all key informants, and a subset of questions and probes will be developed based on each key informant´s roles, knowledge, and responsibilities.

Interviewers will be trained by experienced staff at the Center for Human Services Research who have many years of experience in qualitative interviewing. Trained interviewers will study and review the semi–structured interview protocol at length prior to interviewing to ensure that adequate interview structure is maintained and interviewing is conducted seamlessly. Interviews will be recorded electronically to preserve the content and ensure that each interviewee perspective is accurately captured. Interviews will be transcribed manually during the course of the interview by a research assistant with the Center who will later review the recording and transcribe any missing content.

In the first year of data collection (DSRIP DY3) with the senior leadership team of the PPS, the interview questionnaire will be designed to address the following topics:

- Initial formation of the PPS – exploring the development of the relationships required to form the PPS as well as the project selection.

- Challenges during years 0–2 of DSRIP implementation – exploring launching of the projects, workflow, and engagement with community partners. The IE will also ask about resources required to operate projects.

- Successes during years 0–2 of DSRIP implementation – exploring the application process, project workflow, community partner engagement, and projects.

- Committees – exploring effectiveness of the PPS' governance–related committees and modifications to the committees over time. Also explores challenges and successes related to committees.

- Data – exploring what specific data (quality, financial, utilization, and/or population health measures) the PPS thinks is most important to evaluating progress and success.

- Account Support – exploring the account support provided by NYS for the PPS and the projects.

- Value based payment – preparatory activities and sustainability plans for the future

- Viewpoint – exploring changes to the healthcare system from DSRIP and other interventions in NY.

- Other issues – comments on areas the IE may have missed.

In the second year of research collection (DSRIP DY4), the research team will schedule telephone interviews with PPS staff responsible for projects. The topics to be discussed in the interview are:

- Initial planning of the projects – exploring effectiveness of project selection and planning.

- Major outcomes and challenges of the projects – exploring project launch, major milestones achieved and missed, barriers to project implementation, and the methods by which barriers were overcome (or plans for overcoming them).

- Program sustainability – exploring plans for project sustainability (i.e., continuing projects post–DSRIP).

- Structure and provider linkages on projects – exploring the effectiveness of the project governance structure and provider participation in reaching project milestones.

- Facilitators and barriers to PPS achievement of progress on pay–for–performance metrics related to project milestones – exploring the ways in which PPS are working toward pay–for–performance and the facilitators and barriers for particular projects that are excelling or falling behind on milestones.

- Contractual and financial arrangements – exploring how PPS financial contracts and planning contribute to project milestones and success or failure.

- Changes in the delivery of patient care – exploring the way DSRIP projects have affected the way patients are treated in terms of quality and delivery of care.

- Other ongoing health care initiatives – exploring whether other ongoing initiatives (e.g., NY Prevention Agenda, ACA, Value Based Payments) have had an effect on specific project implementation and operation.

- Progress/effectiveness of projects focused on system and VBP transformation.

- Progress/effectiveness of projects focused on behavioral health.

- Other issues – comments on items we may have missed.

In the third year of data collection, DSRIP DY5, the research team will again schedule interviews with PPS senior leadership. Anticipated topics for the final key informant interviews are:

- Challenges during years 3–5 of DSRIP implementation – explores launching the projects and other workflows including engagement with community partners. We will also ask about resources required to operate projects.

- Successes during years 3–5 of DSRIP implementation – explores project implementation and workflows, and provider and community partner engagement.

- Pay–for–performance – a lookback at the shifts related to pay for performance from DY3 forward.

- Committees – explores effectiveness of the PPS' governance–related committees and modifications to the committees over time. Also explores challenges and successes related to committees.

- Data – explores what specific data (quality, financial, utilization, and/or population health measures) the PPS thinks is most important to evaluating progress and success.

- Account Support – explores the account support provided by NYS for the PPS and the projects.

- Value based payment – successes and challenges to date.

- Viewpoint – changes to the healthcare system from DSRIP and other interventions in NY and future PPS Sustainability plans.

- Other issues – comments on items we may have missed.

Challenges

There are a number of challenges to key informant research of this scale. First, engaging the study population to participate in interviews may be difficult. The research team is requesting time from busy professionals. The research team will mitigate this challenge in several ways. The IE will craft a well–structured communications plan that carefully lays out what is expected of the PPS professionals at each juncture in terms of content, time, and its impact on their performance. Having this communications plan in place will streamline the interviewing process, instill participant confidence in the researchers´ methods, and increase the likelihood of participation. In addition, researchers will also communicate the extrinsic rewards of participating in the research to interviewees (e.g., input from interviews will be communicated to policy makers who have the power to foster meaningful changes at the system level). The communication plan, combined with a thorough explanation of the extrinsic rewards, will combat the difficulties of participant engagement.

Another challenge is that because the evaluation begins in the middle of the demonstration, there may be difficulties in recall of initial startup and implementation phases of DSRIP. The research team will resolve these challenges by using the first research cycle (operational in DSRIP DY3) to ask retrospective questions on the DSRIP initiative to date to glean a broad characterization of DSRIP process and progress. The questionnaire was designed with this lookback procedure in mind and consequently tailored to contain probing questions to enhance participant recall. We will also recruit individuals who have historical knowledge of the program to the key informant interviews so that recollection is augmented. Retrospective data collection is not ideal, but it is commonly used to capture perceptions of change from participants. In addition, qualitative data for the remaining 2.5 years of the demonstration project will be collected in real time, which will provide context and information regarding both the present operation and planned sustainability of projects.

C.2 Focus Groups with Project–Associated Providers:

Focus groups will be conducted with select project–associated providers. The sample will be selected based on geographic location and provider type. Focus groups function best when groups are somewhat homogenous, which fosters greater cooperation, greater willingness to communicate, and less conflict among group members (Stewart & Shamdasani, 2015). The creation of groups based on provider types ensures that each focus group is comprised of individuals whose work is similar, allowing for more candor and in–depth participation from individuals. Drawing from research on best practices for conducting focus groups, the number of participants for each focus group will be limited to 10–12 individuals; this group size allows participants sufficient time to share insights, yet is large enough to provide a diversity of perspectives. The focus groups will be guided by a focus group category, with questions tailored to each PPS group. Each focus group will last approximately one to 1.5 hours. Focus group participants will be informed of the research protocol regarding confidentiality before the session begins. This includes reporting the findings as a group and not associating anyone with individual remarks. With the permission of the participants, all qualitative focus groups and interviews will be audio–recorded and transcribed verbatim, and field notes will be taken to document the process.

Planned topics for the focus groups include:

- Engagement of providers with DSRIP activities and projects

- DSRIP transformation of professional responsibilities

- Integration of projects with other projects or services received by patients

- Characterization of DSRIP to–date

- The effect of other ongoing healthcare initiatives on DSRIP, such as NY Prevention Agenda and the ACA

- Progress of the DSRIP projects and impact on provider´s area of work

- Factors that influence achieving pay–for–performance

- Barriers that influence achieving pay–for–performance

- Transformative efforts toward Value based payment

- Characterization of the contractual and financial arrangements

- Other changes the project partners would recommend

Challenges

A critical challenge for conducting these focus groups is establishing a sampling frame that captures the allocation of provider types across PPS groups and counties. The research team developed the hybrid focus of balancing provider types by geographic areas after significant consultation with key leaders at NYSDOH and the DSRIP PPS Account Support team. The hybrid focus will allow researchers to address this challenge to the greatest extent possible.

A challenge inherent to conducting focus groups is the difficulty of recruiting busy professionals away from their demanding clinical responsibilities. Using a communication strategy that includes support from the PPS entities and DOH, the research team will convey information on the benefits of participation to all providers and provide flexible scheduling times, such as early morning or evening times if necessary. Focus groups also face challenges in terms of gathering retrospective data. As the IE is conducting focus groups across three time points, the IE will only ask lookback questions of the groups held in research collection year 1. To address this challenge, the IE will supplement the data collected via this method by also collecting lookback data from the DSRIP–associated provider survey respondents. The IE will be able to ask more detailed questions about progress, successes, and challenges to date via survey techniques.

C.3 Survey for Patients:

In 2015, in response to the NYSDOH Request for Proposals (RFP), the IE proposed to collect patient surveys. The original evaluation plan described that each PPS would collaborate with researchers to identify patients who were eligible to participate. Planned criteria included patients age 18 and older who had not opted out of DSRIP–related data collection. Research cycle 1 was slated to begin in March 2017 (DSRIP Demonstration Year (DY) 2) and end December 2017 (DSRIP DY3). The survey was planned to repeat for three research cycles, ending in 2019. Planned survey topics included patient satisfaction, reactions to changes to care, and patient experience overall.

NYSDOH is currently fielding a CAHPS survey that will be provided to the IE, rather than requiring the creation and administration of a separate DSRIP–specific survey.

In order to obtain adequate response rates for this difficult–to–reach population, researchers planned on using a hybrid mail and web–based approach. The target sample size for the survey was anticipated to be 1,500 patients surveyed with a response sample of 450 per research cycle.

After a comprehensive review of challenges to an IE–sponsored patient survey and the current data collection burdens on Medicaid members, the IE received approval to perform secondary analysis on the NYSDOH–sponsored Consumer Assessment of Healthcare Providers and Systems (CAHPS©) Health Plan survey for Medicaid enrollees that has been run since DSRIP DY1. After DSRIP was launched, the NYSDOH tailored the report to assist NYSDOH and participating PPS in pinpointing opportunities to improve Medicaid members' experiences. The survey, the CAHPS© C&G Adult Medicaid core survey (Primary Care, version 3.0), is a nationally vetted tool designed to measure patient experiences. The survey was customized to include 18 supplemental questions concerning health literacy, health promotion, and care coordination. The NYSDOH has run the CAHPS© survey each year since year 1 of DSRIP. The survey is sent to 1,500 patients from each of the 25 PPS for a total sample size of 37,500.

The IE's original evaluation questions for patients were focused on how the patients were experiencing change and their satisfaction with that change. Because patients generally do not know that they are in a PPS or part of DSRIP, the scope of the RQs has changed. The IE is now interested in reviewing trends and changes in access to care and experiences with care. The IE is aware that there is no information from before participation in DSRIP or from a control group. As this is an implementation sub–study of the larger IE study, the IE can integrate these findings with the rest of its data without a control group. The IE will measure change through displays of descriptive statistics from both individual questions and composite measures, and will display the trends for each PPS and statewide.

For questions related to access to primary care, the IE will use:

- Q2. Provider is usual source of care

- Q3. Length of provider relationship is at least 1 year or longer

For questions related to experiences with care, the IE will use:

- Q25. Rating of Provider

- Composite: Getting Timely Appointments, Care, and Information

- Composite: How Well Doctors Communicate with Patients

- Composite: Care Coordination

- Composite: Helpful, Courteous, and Respectful Office Staff
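A descriptive trend display for the measures listed above could be built from aggregate results alone. The sketch below is one possible approach, not the IE's stated method: it assumes each PPS reports a rate and a respondent count per year, pools PPS results into a statewide figure weighted by respondents, and computes year-over-year changes. All names and numbers are hypothetical.

```python
def statewide_rate(pps_results):
    """Pool PPS-level rates into one statewide rate, weighted by respondent counts.

    pps_results is a list of (rate, respondents) pairs, one pair per PPS.
    """
    total_respondents = sum(respondents for _, respondents in pps_results)
    if total_respondents == 0:
        raise ValueError("No respondents; the statewide rate is undefined.")
    weighted_sum = sum(rate * respondents for rate, respondents in pps_results)
    return weighted_sum / total_respondents


def year_over_year_change(rates_by_year):
    """Map each year after the first to its change from the preceding year."""
    years = sorted(rates_by_year)
    return {
        later: rates_by_year[later] - rates_by_year[earlier]
        for earlier, later in zip(years, years[1:])
    }


# Hypothetical aggregate figures for two PPS in one survey year.
pooled = statewide_rate([(0.80, 100), (0.60, 300)])

# Hypothetical trend for one composite measure at one PPS.
changes = year_over_year_change({2016: 0.60, 2017: 0.65, 2018: 0.70})
```

Weighting by respondents is an assumption; an unweighted average across PPS would answer a slightly different question (the typical PPS rather than the typical respondent).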

Challenges

The IE had always planned to use descriptive statistics for any patient survey data. While the IE cannot view or analyze data at the individual level from the CAHPS© reports, it can look at the breakdown of composite measures across the state and within each individual PPS. The IE will also provide response rates for each PPS. These data points are appropriate for the planned reports, including both the annual statewide and PPS reports as well as the interim and final Independent Evaluator reports slated for 2019 and 2021, respectively.

In addition to the data from these comprehensive, representative surveys, the IE may also explore patient focus groups. The IE would hold six to eight patient focus groups centered around changes from DSRIP project 3.a.i. Integration of primary care and behavioral health services.

Recruitment of these patients is dependent on the PPS staff linking the IE with medical facilities and providers that would be open to hosting focus groups. Development of these groups is also dependent on the rollout of project 3.a.i. and that project´s patient engagement. The IE will work closely with the NYSDOH in DSRIP DY4 to determine feasibility of this approach and the types of data that would be appropriate to collect from the consumer facing group. The IE may also request to review findings from ongoing STC–required Consumer Education Campaign focus groups that the NYSDOH is running in complementary efforts.

C.4 Electronic Survey of Project–Associated Providers

Sample Selection

In order to gather uniform information on the functioning of individual projects, an electronic survey will be administered annually to project–associated providers. The sample will be drawn from lists maintained by PPS administrators of providers who are associated with each of their projects and known as "engaged providers." The IE anticipates the survey will target 2,400 providers annually, a number chosen because past research with health care professionals has generally yielded response rates between 50 and 60% (McLeod et al., 2013; Nielsen et al., 2009; Podichetty et al., 2006). At a 50% response rate, this target would yield a sample of 1,200 health care providers, which will allow researchers to examine the data by various subgroups (e.g., provider type) and allow for analyses based on geographic location. Researchers at the Center for Human Services Research are immersed in the literature on best practices in survey collection, have extensive experience in this area, and have specifically investigated approaches for maximizing participation in electronic surveys among health care professionals to ensure an adequate sample is achieved (e.g., McLeod et al., 2013).
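The relationship between the 2,400 providers targeted, the 50 to 60% response rates cited, and the sample of 1,200 can be checked with simple arithmetic. The function names below are hypothetical; only the figures come from the paragraph above.

```python
import math


def expected_respondents(invited, response_rate):
    """Number of completed surveys expected from a given number of invitations."""
    return round(invited * response_rate)


def invitations_needed(target_respondents, response_rate):
    """Smallest number of invitations expected to reach the target sample."""
    if not 0 < response_rate <= 1:
        raise ValueError("response_rate must be in the interval (0, 1].")
    return math.ceil(target_respondents / response_rate)


# Figures taken from the text: 2,400 providers targeted, 50-60% response.
low_estimate = expected_respondents(2400, 0.50)
high_estimate = expected_respondents(2400, 0.60)
required = invitations_needed(1200, 0.50)
```

Under these figures the expected responding sample ranges from 1,200 to 1,440 providers, so the 1,200 figure corresponds to the lower end of the cited response-rate range.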

Data Collection Procedures

Surveys will be conducted with DSRIP–associated providers once per year in the following periods:

- Research Cycle 1 (July – December 2017)

- Research Cycle 2 (July – December 2018)

- Research Cycle 3 (July – December 2019)

The electronic survey will utilize Qualtrics Survey Software to ensure accurate data capture and preserve participants´ responses in a confidential manner. Qualtrics Survey Software is known for its elegant design that will mitigate any difficulties that generally arise in web navigation with electronic surveys. In addition, the survey length will be as short as possible while collecting all relevant information so as to encourage participant responses and reduce respondent fatigue.

The link to the survey designed in Qualtrics will be emailed to individuals from the list of engaged providers. The sample of engaged providers will be developed from the DSRIP MAPP Provider Import Tool and its hybrids used by each PPS. Contact information may need to be validated against a second contact database, but the Provider Import Tool or the similar tool being used by the PPS will determine which providers are designated as "engaged." Providers will have ample time to respond, and gentle follow–up reminder emails will be sent to encourage providers who have not yet participated to complete the survey.

The survey questions will focus specifically on progress within individual projects, barriers and facilitators to project implementation, and perceived effectiveness. The survey will generate user–based responses that will allow the IE to provide individualized feedback to each PPS for quality improvement of their projects (Bate & Robert, 2007). Topics will include:

- Service provision within each project dimension

- Project operation compared to the planned model and reflection of this change over implementation years

- Future anticipated changes to project models

- Factors of each project that have helped or hindered with implementation

- Challenges faced in working with the PPS entities

- Challenges faced with specific projects and corrective actions (if any)

- Changes to project(s) or DSRIP operation

- Level of satisfaction with planning process

- Reflections on what worked well and less well during the planning process

- Value based payment readiness and change

- Changes to program planning processes for specific projects

- Satisfaction with current operation

- Overall perception of DSRIP

- Overall perception of projects

Project Providers Survey Challenges

One major challenge to survey data collection includes identification of the sample. The research team will work with NYSDOH and the DSRIP Account Support team to develop a method to pull the sample and ensure its accuracy. It is anticipated that the sample will be pulled by the research team manually from the MAPP Network tool. The challenge with sample identification in this case is that it will require collaboration with these entities as well as the PPS to identify potential providers who will participate; however, the research team at the Center for Human Services Research is poised to meet this challenge based on the team´s extensive experience in coordinating data collection endeavors of this nature through other quantitative research projects. This challenge will be mitigated both through the experience of the research team as well as the planning that went into utilizing the MAPP Network tool to pull the sample.

A second anticipated challenge is accurate categorization of provider type. Upon receipt of feedback from the PPS entities during the DSRIP Mid–Point Assessment, NYSDOH allowed PPS to broaden their own categorization tools in early 2017. This tool replaces the Provider Import Tool (PIT) and allows for greater customization. Because not every PPS will broaden provider categorization, the survey must be designed to collect responses on categorization type and should mirror the language that the PPS entities use to define their providers. Another challenge is that providers may engage with multiple PPS entities on the same or different projects. The survey will be designed to allow for separate responses to project questions for each PPS entity.

C.5 Other Data Collection

To reflect the real–world nature of this evaluation and to gather data from all stakeholders, the IE will explore the addition of other surveys or interviews.

Managed Care Representatives – The IE will explore the addition of a survey with managed care representatives in DSRIP DY5. The sample would include representatives from the 18 mainstream plans. Topics to be covered in the survey include successes and challenges of DSRIP–related initiatives to date, engagement with PPS, and transformative efforts of DSRIP toward managed care plan value based payment contracting.

Project Approval and Oversight Panel (PAOP) – The IE will survey the members of the Project Approval and Oversight Panel in DSRIP DY4 to gather their perspectives on the implementation and process progress of DSRIP. The IE will also collect the panel members' feedback and suggestions.

C.6 Implementation/Process Analysis Summary

Analysis will focus on identifying usable feedback for improvement for each of the 25 PPS. An additional focus will be identifying common and unique themes that arise in the data to inform the evaluation of DSRIP implementation as a whole. Any quantitative survey data will be analyzed using SPSS statistical software. The qualitative data obtained through key informant interviews, focus groups, and open–ended survey questions will be transcribed and analyzed, using a qualitative data software program.

Coding and analysis of qualitative data will follow the strategies described by Bradley, Curry and Devers (2007). Once data are organized and reviewed, the IE will use an integrated approach to identify and categorize the data according to concepts, relationships between concepts, and evaluative participant perspectives. Categorization based on setting and participant characteristics will also be completed, as appropriate. This categorization process facilitates the development of taxonomies, themes and theory, and comparisons. Responses will then be reviewed independently by at least two IE staff utilizing the finalized coding structure. Any coding discrepancies between reviewers will be resolved with discussion to achieve consensus. Coded data will be analyzed and interpreted to identify major concept domains and themes. Analysis will focus on understanding of the DSRIP initiative as a whole, as well as on understanding of each individual PPS.

Progress on qualitative data collection and analysis will be included in quarterly progress reports, as well as any changes in implementation strategies that have occurred based on feedback to the PPS and project sites. In addition, results from the qualitative data analysis will be reported in the overall annual reports. Information on individual PPS will be presented in annual case study reports to each PPS to be used to guide quality improvement through project refinements and enhancements. Qualitative data will also contribute to the interim and final summative reports.

Section D:

Comparative Analysis:

To address questions pertaining to the effects of the types of projects adopted by the PPS, the relative effectiveness of specific strategies employed within project types, and the contextual factors associated with PPS success or failure to demonstrate improvement in the metrics associated with each domain, quantitative and qualitative comparisons may include the following:

- Where there is variation in the strategies selected per the PPS project requirements described in the STC above, assess the effect on the pertinent outcome of PPS having selected a particular strategy. For example, a comparison would be made in the improvement in diabetes care (Domain 2) between PPS that implement a project to address this issue and PPS that do not.

- The relative effectiveness of particular projects intended to produce the same outcome. For example, among PPS that opt for a strategy to improve asthma care, compare such improvement between those PPS that chose to implement a project to expand asthma home–based self–management programs to those PPS that chose alternative projects to improve asthma care.

- Identification of characteristics common to those PPS receiving or not receiving maximum payment based on project valuation.

- Comparisons between PPS operating in different regions of New York to identify successes and challenges associated with local resources or procedures.

- Patient–level comparisons by factors such as age, sex, race, presence of selected chronic conditions, and mental health/substance abuse status to obtain information on variations in service experience and satisfaction under DSRIP, by patient characteristics.

The comparative analysis will be designed by the IE to address the seven (7) research questions (RQ) (see Section B.2). The IE's approach is to apply quantitative techniques to assess relative PPS performance on domain–specific metrics over time, and to supplement this work with qualitative data collection to provide further contextualization of the findings. Specifically, the IE will supplement its quantitative analyses of publicly available data sets by analyzing other primary data, such as 1) focus groups, 2) semi–structured key informant interviews with PPS administrators and staff, 3) surveys of providers with semi–structured interview follow–up, and 4) surveys with patients. The approach will include clustering PPS to create comparison groups according to project selections, the use of DID methodology, and multi–level modeling.
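As a rough illustration of the DID logic mentioned above, the following sketch computes a simple two–group, two–period difference–in–differences estimate. The function name, group assignments, and rates are hypothetical and are not drawn from the evaluation; the IE's actual models (including multi–level modeling) would be considerably richer than this.

```python
from statistics import mean


def did_estimate(treated_pre, treated_post, comparison_pre, comparison_post):
    """Return a simple 2x2 difference-in-differences estimate.

    Each argument is a list of PPS-level outcome rates. All inputs used
    below are hypothetical illustration values, not evaluation data.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    comparison_change = mean(comparison_post) - mean(comparison_pre)
    # The estimate is the change in the treated group net of the change
    # observed in the comparison group over the same period.
    return treated_change - comparison_change


# Hypothetical ED visit rates per 1,000 for PPS that did / did not adopt a project.
treated_pre = [1.4, 1.2, 1.3]
treated_post = [1.0, 0.9, 1.1]
comparison_pre = [1.3, 1.1]
comparison_post = [1.2, 1.0]

estimate = did_estimate(treated_pre, treated_post, comparison_pre, comparison_post)
print(round(estimate, 2))
```

A negative estimate here would indicate that the outcome fell more among adopting PPS than among the comparison PPS, under the usual assumption that both groups would otherwise have followed parallel trends.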

Further, the IE will develop a compendium of domain projects across all DSRIP PPS that includes information important to the comparative analysis. The compendium will include information on timeline (start and end dates of implementation), planning decisions (changes that occurred prior to implementation or during implementation), fidelity of the intervention to its original intent (ranked low to high), relative success to internal expectations (low to high), and previous work (was the program new or building upon existing, pre–DSRIP activity). This compendium will allow the IE to examine variation between PPS within projects and across domains in a way that will contribute to the IE's understanding of DSRIP and exploit less apparent differences between the programs and projects to drive analyses. For example, if two projects look the same "on paper" but one is new and one is based upon an existing initiative, the IE might see differential outcomes (if the IE is looking at change over time).
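One way to picture a compendium entry is as a structured record with one field per item listed above. The sketch below is only an assumption about how such a record might be laid out; the class name, field names, 1 to 5 rating scales, and sample entries are all hypothetical and do not describe the IE's actual compendium.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class CompendiumEntry:
    """Hypothetical record for one project at one PPS."""
    pps_name: str
    project: str
    start_date: date
    end_date: Optional[date]      # None if implementation is ongoing
    planning_changes: str         # changes made before or during implementation
    fidelity: int                 # assumed scale: 1 (low) to 5 (high)
    relative_success: int         # assumed scale: 1 (low) to 5 (high)
    builds_on_existing: bool      # True if based on pre-DSRIP activity


def split_by_prior_work(
    entries: List[CompendiumEntry],
) -> Tuple[List[CompendiumEntry], List[CompendiumEntry]]:
    """Separate new projects from those building on pre-DSRIP activity."""
    new = [e for e in entries if not e.builds_on_existing]
    existing = [e for e in entries if e.builds_on_existing]
    return new, existing


# Illustrative entries only; names and values are invented.
entries = [
    CompendiumEntry("PPS A", "Asthma home-based self-management",
                    date(2015, 4, 1), None, "Expanded target population", 4, 3, False),
    CompendiumEntry("PPS B", "Asthma home-based self-management",
                    date(2015, 4, 1), None, "None reported", 5, 4, True),
]

new_projects, existing_projects = split_by_prior_work(entries)
print(len(new_projects), len(existing_projects))
```

Splitting entries this way mirrors the example in the text: two projects that look the same "on paper" can be compared separately depending on whether they were new or built on an existing initiative.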

The comparative analysis will be designed to address the seven RQs with specific emphasis on the five specific issues in this section above. The research aims for comparative analysis are:

- To compare PPS performance on domain–specific metrics for those that did/did not adopt specific DSRIP projects.

- To evaluate the relative effectiveness of specific strategies employed within specific projects.

- To examine contextual factors related to PPS successes and failures in demonstrating improvement in domain–specific metrics.

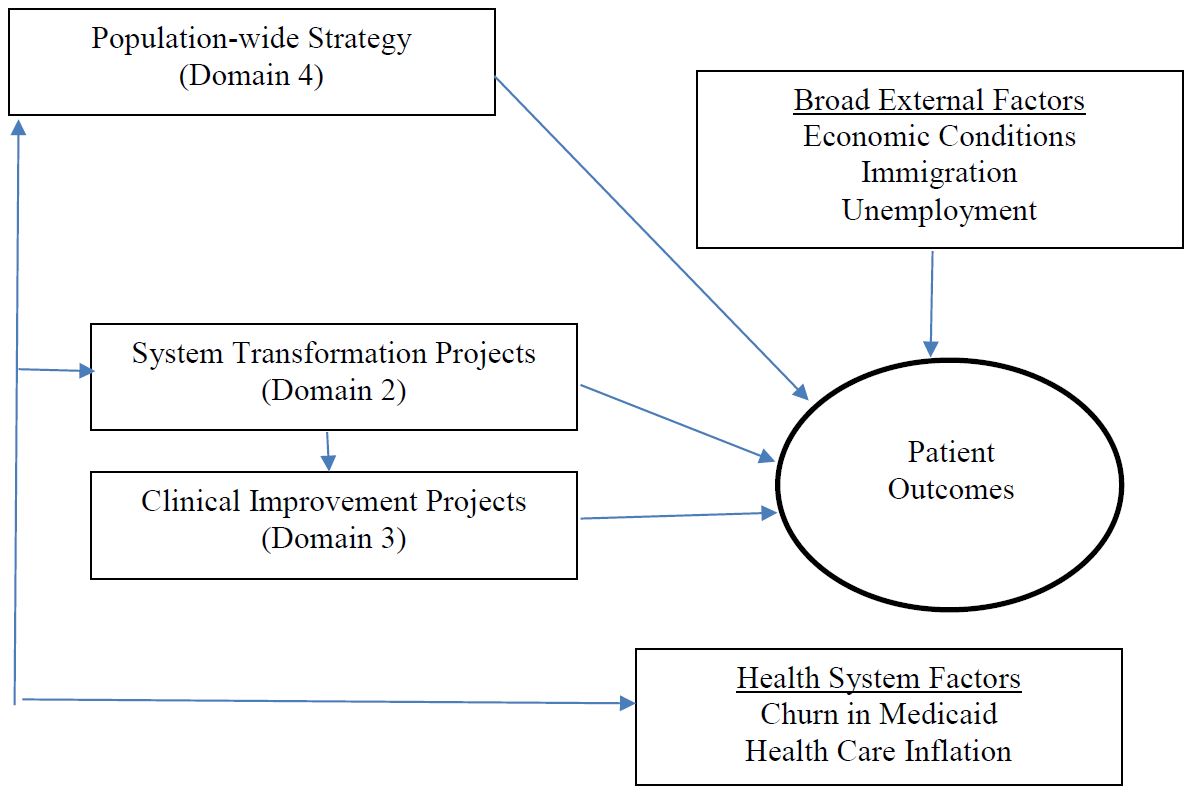

The conceptual framework below depicts the factors that are expected to impact health outcomes in the broader context of the DSRIP program. System Transformation (Domain 2), Clinical Improvement (Domain 3), and Population–wide Strategies (Domain 4) are all anticipated to impact patient–level outcomes. Moreover, broad external factors, such as economic conditions, immigration, and unemployment, are also likely to influence patient outcomes. To this point, issues related to beneficiary eligibility and the frequency of patients going in and out of the Medicaid system tend to play a role in influencing health outcomes. In addition, the varying performance levels and culture related to organizations that are early adopters versus late adopters of DSRIP projects and strategic initiatives also are likely to play a role in determining patient–level outcomes.

Conceptual Framework:

Evaluating DSRIP, given the multiple PPS networks, partnerships, and projects within each domain, is a complex endeavor. The IE will leverage both qualitative and quantitative data to inform the evaluation design by embracing the variation across and within PPS interventions and the varied goals of each.

Early analyses will focus on the direct relationship between domain projects and the ultimate outcome measure. Analyses will be descriptive in nature when examining broader PPS outcomes, but additional multivariate analysis will be used to control for differences between populations, regions, providers, and other characteristics of the PPS that exist beyond the intervention or within the intervention project.

Descriptive Analysis Example for Domain 2 Impact on Emergency Department Visits:

| PCMH/Advanced Primary Care (N=5) | → | Reduction in ED Use per 1,000 visits (%) |

| Integrated Delivery System (N=22) | → | Reduction in ED Use per 1,000 visits (%) |

In the example above, the underlying hypothesis is that specific Domain 2 projects will, in aggregate, result in reductions in emergency department (ED) visits per 1,000 total visits over time (from pre–DSRIP to post–DSRIP). Testing this hypothesis will use the inventory of DSRIP projects across PPS and descriptive statistics to understand whether ED visit use was reduced in the five PPS that had a Patient Centered Medical Home (PCMH)/Advanced Primary Care intervention when compared to sites without a PCMH/Advanced Primary Care intervention, and separately whether the 22 PPS with an integrated delivery system intervention experienced a reduction in ED visits when compared to those without an integrated delivery system intervention. These descriptive tables will give a general sense of what happened for the groups of sites that opted into a specific Domain project versus those that did not, but they do not address multiple interventions in the same domain or control for underlying PPS characteristics. The unit of analysis will be the PPS site, and data will be pulled from the PPS project list and administrative records (Medicaid claims for ED visits) and/or PPS Quarterly Implementation Project Plan Reports (from the PPS to NYSDOH). The resulting table is likely to appear in the evaluation report in the following format:

Example Output for Bivariate Analysis by Project:

Measure 1: Percentage Change in ED Visits per 1,000

| Domain 2 Project | Number of PPS participants | Baseline Rate | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 |
|---|---|---|---|---|---|---|---|
| 1. Integrated Delivery System | 22 | 1.3 per 1,000 visits | −0.2 | −0.3 | −0.4 | −0.3 | −0.5 |
| 2. PCMH/Advanced Primary Care | 5 | 1.1 per 1,000 visits | −0.1 | −0.2 | −0.3 | −0.2 | −0.4 |
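The bivariate comparison described above can be sketched in a few lines. This is a minimal illustration that assumes each PPS has a baseline rate and a yearly rate on record; the record layout, function names, project labels, and all values are hypothetical and are not evaluation data.

```python
from statistics import mean


def percent_change(baseline, current):
    """Percent change from the baseline rate to the current rate."""
    return (current - baseline) / baseline * 100.0


def compare_by_project(records, project, year):
    """Mean percent change for PPS that adopted `project` versus those that did not."""
    adopters, others = [], []
    for rec in records:
        change = percent_change(rec["baseline"], rec[year])
        if project in rec["projects"]:
            adopters.append(change)
        else:
            others.append(change)
    # mean() raises on an empty list, so an empty group is reported as None.
    return (mean(adopters) if adopters else None,
            mean(others) if others else None)


# Hypothetical PPS-level ED visit rates per 1,000 (illustration only).
records = [
    {"pps": "PPS A", "projects": {"IDS", "PCMH"}, "baseline": 2.0, "year1": 1.8},
    {"pps": "PPS B", "projects": {"IDS"}, "baseline": 1.0, "year1": 0.95},
    {"pps": "PPS C", "projects": set(), "baseline": 1.0, "year1": 1.0},
]

pcmh_adopters, pcmh_others = compare_by_project(records, "PCMH", "year1")
print(pcmh_adopters, pcmh_others)
```

As the text notes, a comparison of this kind gives only a general sense of group differences; it does not separate overlapping interventions or adjust for PPS characteristics.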

The second stage of descriptive analysis will focus on interactions between Domains and Projects across PPS networks, to better understand the impact of the customizable and flexible nature of the DSRIP interventions the IE is tasked with evaluating. In this way, the additive relationship of implementing a PCMH/Advanced Primary Care project along with an integrated delivery system project can be better understood and incorporated into the evaluation approach. The table below is likely to appear in the evaluation report in the following format:

Example Output for Bivariate Analysis by Project Combinations:

Measure 1: Percentage Change in ED Visits per 1,000

| Domain 2 Project | Number of PPS participants | Pre–DSRIP Rate | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 |
|---|---|---|---|---|---|---|---|
| 1. Integrated Delivery System only | 19 | 1.2 per 1,000 visits | −0.2 | −0.3 | −0.4 | −0.3 | −0.5 |
| 2. PCMH/Advanced Primary Care only | 2 | 1.0 per 1,000 visits | −0.1 | −0.2 | −0.3 | −0.2 | −0.4 |
| 1 & 2. PCMH/Advanced Primary Care + Integrated Delivery System | 3 | 1.4 per 1,000 visits | −0.2 | −0.2 | −0.3 | −0.4 | −0.5 |
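Grouping PPS into mutually exclusive project combinations, as in the table above, could be sketched as follows. The function name, the "Neither" label, and the sample PPS selections are assumptions made for illustration and do not reflect actual PPS project choices.

```python
from collections import Counter

TRACKED_PROJECTS = ("Integrated Delivery System", "PCMH/Advanced Primary Care")


def combination_label(projects, tracked=TRACKED_PROJECTS):
    """Assign a PPS to exactly one group based on which tracked projects it chose."""
    chosen = [p for p in tracked if p in projects]
    if not chosen:
        return "Neither"
    if len(chosen) == 1:
        return chosen[0] + " only"
    return " + ".join(chosen)


# Hypothetical project selections for four PPS (illustration only).
selections = {
    "PPS A": {"Integrated Delivery System"},
    "PPS B": {"Integrated Delivery System", "PCMH/Advanced Primary Care"},
    "PPS C": {"PCMH/Advanced Primary Care"},
    "PPS D": {"Integrated Delivery System"},
}

group_counts = Counter(combination_label(p) for p in selections.values())
for label, count in group_counts.items():
    print(label, count)
```

Because each PPS receives exactly one label, the group counts sum to the number of PPS, which keeps the "only" rows and the combined row from double counting.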